
FRT: The Game-Changing Technology

Facial Recognition: A Strategic Imperative for National Security

An abundance of data is overwhelming intelligence analysts and law enforcement officials across the globe. Without immediate action to get ahead of this challenge, our national security will fall behind.

In recent years, the intelligence and law enforcement communities have struggled to extract actionable and accurate investigative information from a constantly expanding database of image evidence.

Additionally, the sheer number of malevolent actors on the web, especially the dark web, coupled with the rapidly expanding use of video, exacerbates this investigative challenge. As the volume of information balloons and the types of nefarious activity multiply, current systems have already reached the limits of their ability to analyze data and extract meaningful insights.

This data problem will only grow. Cisco recently projected annual internet traffic of 4.8 zettabytes by 2022.

This presents real and fundamental challenges to the law enforcement and intelligence communities, which must collect, analyze, store, and retrieve data to counter diverse national security and criminal threats. Hidden within these troves of information are photos and videos of individuals who may threaten the national security of the United States and its allies. Our adversaries seek to hide within these data stores while concealing their malevolent intent.

This data challenge sets up an international game of digital hide and seek for the intelligence and law enforcement communities. Losing this game would undoubtedly have stark, and quite possibly disastrous, consequences. Winning will require nations to lead the race to develop superior AI, machine learning, and computing resources. Biometrics, and specifically facial recognition technology (FRT), provide a game-changing means to detect threats immediately and verify identities with near-certainty. Given the vast volumes of data that include photos or videos, the legally authorized use of FRT is likely to become one of the most strategic tools supporting our national security posture.

Face recognition has been an active area of research and development for more than fifty years, and commercial systems began to emerge in the late 1990s. Over the past twenty years, FRT accuracy has improved a thousand-fold; algorithms applied to portrait-style images now recognize faces significantly better than humans can and analyze data sets with far greater speed and scale.

This paper will address some of the misconceptions frequently associated with face recognition, provide a technical assessment of the current state of the technology, and evaluate recent claims that China and Russia are accelerating FRT capabilities beyond those currently available to the United States and partner countries.

Face recognition and public acceptance

FRT entered the public consciousness through the Hollywood surveillance film genre, and television crime shows have also played a major role in introducing the public to the technology. These dramatic on-screen portrayals of its capabilities have badly distorted public perceptions. Many now believe that cameras in public spaces record our every movement, and that video of past activities can be recovered and instantly matched to individual names and dossiers on demand. Unsurprisingly, the privacy community has become greatly concerned, especially about video surveillance combined with face recognition technology.

At the same time, however, consumers have embraced FRT. Off the screen and in daily life, Apple’s iPhone X, released in November 2017, introduced most consumers to FRT: a face scan and match, used in place of a password or fingerprint, unlocks the smartphone. Despite its high price, the iPhone X’s popularity has significantly driven adoption.

Drawn by its convenience, usability, and security benefits, consumers have embraced the technology, and Apple has extended it to other product lines. FRT is also appearing in many other applications, such as Facebook, and gaining wider public acceptance.

The U.S. Department of Homeland Security has begun piloting projects that use FRT to track international air departures, and these pilots are starting to show real promise in addressing some persistent immigration challenges. But this and similar government applications of FRT have agitated the privacy community, even though these systems use images that have long been on file with customs and immigration authorities, e.g., passport photos and visa application photos. Data show that 98% of citizens happily participate, and public reaction has been overwhelmingly favorable.

Amazon’s Rekognition product has also drawn the ire of the civil libertarian community. Many groups have demanded that Amazon stop selling the technology to government agencies, citing what they perceive as unacceptable performance differences across demographic groups, genders, and age cohorts. They do so despite significant documented performance improvements over the current practice of unaided human screening.

How well does face recognition technology work?

The U.S. National Institute of Standards and Technology (NIST) established an ongoing one-to-one (1:1) image matching algorithm evaluation program in February 2017 and, as of January 2019, had published 18 reports evaluating vendors’ performance. Only four algorithms were submitted for the first round of testing. The best FRT performance on visa images demonstrated a false match rate (FMR) under 0.000001 (1e-06) and approximately 92% match accuracy. In January 2019, NIST evaluated 100 algorithms, and the best algorithm’s accuracy jumped to 99.6%. All the algorithms from the first round of testing have since been retired and replaced with improved algorithms. The NIST testing reveals very rapid progress in 1:1 face matching performance.
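1:1 results of this kind pair a match accuracy with a fixed false match rate. The sketch below illustrates how such a pairing is computed: a threshold is chosen so that no more than the target fraction of impostor (different-person) comparisons would be accepted, and the false non-match rate (one minus match accuracy) follows from that threshold. It assumes higher similarity scores mean more similar faces and a decision rule of "declare a match only if score > t"; the score lists are invented, and this is a general illustration rather than NIST’s evaluation code.

```python
import math
from typing import Sequence, Tuple


def threshold_at_fmr(impostor: Sequence[float], target_fmr: float) -> float:
    """Return a threshold t such that at most target_fmr of impostor scores exceed t.

    Decision rule assumed here: a comparison is declared a match only if score > t.
    """
    ranked = sorted(impostor, reverse=True)
    allowed = math.floor(target_fmr * len(ranked))  # impostor pairs we may falsely accept
    if allowed >= len(ranked):
        return -math.inf  # the target permits accepting every impostor pair
    # Scores strictly above ranked[allowed] number at most `allowed`, even with ties.
    return ranked[allowed]


def error_rates(genuine: Sequence[float], impostor: Sequence[float], t: float) -> Tuple[float, float]:
    """Return (FMR, FNMR) at threshold t."""
    fmr = sum(s > t for s in impostor) / len(impostor)
    fnmr = sum(s <= t for s in genuine) / len(genuine)
    return fmr, fnmr


# Hypothetical similarity scores for same-person and different-person pairs.
genuine = [0.90, 0.80, 0.44, 0.95, 0.70]
impostor = [0.10, 0.20, 0.30, 0.35, 0.40, 0.15, 0.25, 0.05, 0.45, 0.50]

t = threshold_at_fmr(impostor, target_fmr=0.1)
fmr, fnmr = error_rates(genuine, impostor, t)
print(f"threshold={t}, FMR={fmr}, FNMR={fnmr}, match accuracy={1 - fnmr}")
```

With a target as strict as 1e-06, the impostor set must contain at least a million comparisons before the chosen threshold says anything about that rate, which is one reason such evaluations depend on very large image collections.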

NIST has also been conducting one-to-many (1:N) performance testing in three phases (two of which are complete, with interim results published) using a 12-million-person gallery that contains multiple images for many individuals. This gallery is nearly an order of magnitude larger than previous galleries and is therefore much more representative of typical government identification use cases.

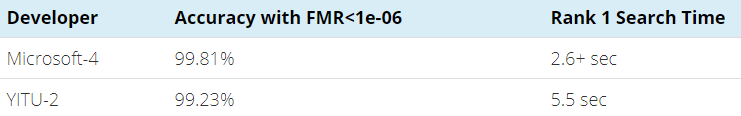

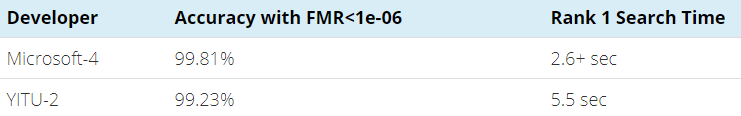

For Phase 1 of NIST’s 1:N testing, 31 organizations submitted a total of 65 algorithms for evaluation. Only 16 algorithms were able to work with large galleries. Rank-one match accuracy on the 12-million-person gallery ranged from 95.1% to 99.5%, with only two algorithms demonstrating less than 98% match accuracy.

For Phase 2, 38 organizations submitted 127 algorithms. Only 26 worked with large galleries, but for these the match accuracy ranged from 95.1% to 99.8%, with 11 algorithms exceeding 99% match accuracy and another 10 exceeding 98%.
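Rank-one accuracy asks whether the correct identity is the top-scoring candidate returned from the gallery. The following minimal sketch assumes cosine similarity over toy two-dimensional feature vectors and scores each identity by its best-matching gallery image, since galleries hold several images for many people. The vectors, identities, and helper names are hypothetical; operational 1:N systems search millions of high-dimensional templates with optimized search rather than an exhaustive loop like this one.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def ranked_identities(probe: Vector, gallery: List[Tuple[Vector, str]]) -> List[Tuple[str, float]]:
    """Rank gallery identities by their best-scoring image, highest first."""
    best: Dict[str, float] = {}
    for features, identity in gallery:
        score = cosine(probe, features)
        if score > best.get(identity, -math.inf):
            best[identity] = score
    return sorted(best.items(), key=lambda item: item[1], reverse=True)


def rank_k_accuracy(probes: List[Tuple[Vector, str]], gallery: List[Tuple[Vector, str]], k: int = 1) -> float:
    """Fraction of mated probes whose true identity appears among the top k candidates."""
    hits = 0
    for features, true_id in probes:
        top_k = [identity for identity, _ in ranked_identities(features, gallery)[:k]]
        hits += true_id in top_k
    return hits / len(probes)


# Toy templates: identity "A" is enrolled twice, "B" and "C" once each.
gallery = [([1.0, 0.0], "A"), ([0.9, 0.1], "A"), ([0.0, 1.0], "B"), ([0.7, 0.7], "C")]
# The last probe is a poor image of "B" that happens to score closest to "C".
probes = [([0.95, 0.05], "A"), ([0.1, 0.9], "B"), ([0.6, 0.8], "B")]

print(rank_k_accuracy(probes, gallery, k=1))  # rank-one accuracy
print(rank_k_accuracy(probes, gallery, k=2))  # true identity within the top two
```

The third probe shows why rank-one accuracy is the stricter measure: its true identity is on the candidate list, just not first.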

The Bottom Line

Recent tests demonstrate that FRT algorithms have come a long way in both 1:1 and 1:N match accuracy. In each category, under controlled testing environments, multiple vendors have achieved accuracy above 99%. While important, these test results do not provide a complete picture of FRT performance under the real-world conditions facing law enforcement agents and intelligence analysts. Probe images from operational cases are rarely of “portrait-style” quality, and other environmental and operational factors complicate 1:1 and 1:N matching. Therefore, assessments of FRT algorithm performance should draw on both controlled and operational testing environments.

Addressing face recognition criticism

No responsible discussion of FRT would be complete without careful consideration of the concerns raised by privacy and civil liberties advocates. We recognize that individuals expect clarity about how the technology may be used. Rather than enumerate every concern that has been raised, we offer some high-level guidelines we think are important for responsible FRT deployment:

1. FRT should be used only when there is an appropriate, compelling, and lawful use case, and then only in accordance with best practices. For government agencies, this requires documenting privacy threshold and impact assessments. In addition, government agencies should avoid collecting more images of innocent people than necessary to achieve national security or law enforcement imperatives, develop appropriate policy implementation guides, enforce adherence to the intended use of the technology, and protect and safeguard data under well-defined, appropriate retention rules.

2. FRT should be regularly refreshed to keep pace with current FRT capabilities. Employing current technology will help overcome ongoing criticism of ethnic and age bias in FRT. Many federal biometric systems use FRT that has not been significantly updated in nearly ten years. Old and obsolete FRT algorithms may exhibit ethnic bias in match rates that would be addressed by upgrading to current FRT with inherently improved algorithms.

3. Professionals who interact with FRT systems should be adequately trained, qualified, and routinely proficiency-tested to ensure the continuing accuracy of their decisions. Many people look alike, which can lead to misidentifications that decrease overall confidence in FRT for both investigative and prosecutorial purposes. Adequate training, qualification, and ongoing proficiency testing will allow agents and analysts to maintain quality standards and account for technology limitations.

• The human brain is naturally inclined to see a face as a “whole,” making it unnatural and difficult for an untrained person to focus on the small, unique features and details that help to accurately identify or eliminate a candidate, especially where there is change due to aging or injury, differing angles or poses, or poor-quality images.

• Humans also tend to have more difficulty comparing the faces of people of races different from their own, a difficulty exacerbated in people without appropriate training.

• And lastly, humans without proper training overestimate their ability to conduct such comparisons accurately and do not understand how concepts such as confirmation bias or contextual bias can affect decision making.

This last point is critical: despite portrayals on television and in films, the vast majority of FRT applications still require trained human operators to make final identification determinations. Current FRT tools help expedite the review of video evidence but do not make final decisions regarding human identification. Current systems generally provide a list of identification candidates to trained operators for further adjudication. Any inherent limitation or bias within the software can produce an improperly ranked candidate list, but the actual identification of an individual is ultimately determined by the facial examiner performing the adjudication.
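The hand-off described above can be pictured as a function whose output is a ranked lead list rather than an answer. In this minimal sketch, the similarity scores are assumed to come from some matcher upstream, and the identity labels, the list length k, and the minimum score are all hypothetical. Nothing in the code declares an identification; deciding among the candidates is left to the examiner.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Candidate:
    identity: str  # gallery identity label (hypothetical IDs below)
    score: float   # similarity score reported by the matcher
    rank: int      # 1 = highest-scoring candidate


def build_candidate_list(scores: Dict[str, float], k: int, min_score: float) -> List[Candidate]:
    """Return up to k candidates at or above min_score, best first.

    The result is an investigative lead for a trained facial examiner to
    adjudicate; it is not an identification.
    """
    eligible = [(identity, s) for identity, s in scores.items() if s >= min_score]
    eligible.sort(key=lambda pair: pair[1], reverse=True)
    return [Candidate(identity, s, rank) for rank, (identity, s) in enumerate(eligible[:k], start=1)]


scores = {"id_017": 0.91, "id_342": 0.88, "id_005": 0.42, "id_220": 0.79}
for c in build_candidate_list(scores, k=3, min_score=0.5):
    print(c.rank, c.identity, c.score)
```

If the matcher’s scores are skewed for some population, the ordering of this list is what suffers; the examiner still reviews every candidate before any determination is made.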

For example, the New York Police Department has become increasingly vocal regarding the appropriate use of FRT. The NYPD’s Real Time Crime Center (RTCC) oversees the Face Identification Section, where face recognition algorithms are applied to images and video obtained from crime scenes to help investigators identify potential suspects. Trained facial examiners review hundreds of candidate photos when attempting to identify an individual, corroborating video or photo evidence with other photos of the potential suspect sourced from previous arrests, social media posts, or other databases available to investigators.

The Chinese and Russian FRT menace

In a growing trend, algorithms from YITU Technology in Shanghai, China, have turned in top performances in NIST’s 1:1 match tests in recent years. NIST maintains an “FRVT Leaderboard,” and at present nine of the top ten performing 1:1 algorithms on it were developed by Chinese or Russian companies. Similarly, of the ten highest-performing algorithms in NIST’s 1:N testing (page 7), seven came from China, two from Russia, and one from Lithuania. This tally includes the top performer, Microsoft, which many analysts believe deployed a Chinese-developed algorithm.

A superficial assessment, looking only at top-performing scores on the most demanding test, has led some to believe that China and Russia now dominate FRT.

Because many existing uses of FRT involve government national security and public safety programs, there is growing concern about the prospect of adversarial foreign powers dominating this technology.

One reason cited by industry analysts is the relative ease with which Russian and Chinese researchers can access large volumes of annotated images. Unencumbered by the privacy protections advocated by Western governments, Chinese and Russian researchers can feed artificial intelligence (AI) systems with data from a growing network of surveillance sensors and government databases of personally identifiable information to accelerate the machine learning process. In Xinjiang and other regions experiencing heavy-handed police tactics, these AI-based FRT systems are further aided by continuous feedback loops from large police forces actively confirming or refuting the accuracy of face recognition hits.

Recently, multinational companies, notably IBM, have stepped in to support the evolution of FRT algorithms that adhere to Western standards by releasing large volumes of annotated images to the open source community. These firms hope to establish a deliberately unbiased data baseline for developing and testing face recognition algorithms. Yet the training data available to Western developers, measured in millions of subjects, pales in comparison to Chinese holdings, which approach the size of China’s national population and appear to include large numbers of Western faces as well.

Along with better understanding of, and capability in, the analysis and matching of faces comes insight into how our public safety and national security systems might be defeated. We must never forget that in a few hours on the morning of Tuesday, September 11, 2001, just 19 men killed 2,977 victims, injured more than 6,000 others, and caused more than $10 billion in infrastructure and property damage. Although many of the hijackers, and their images, were in investigative files, we were then unable to connect the dots to prevent the tragic events of that day. Our analytic capability is vastly better today, but our systems must recognize adversaries when confronted with them.

The entire United States government must do its part to help protect our national competitiveness and intelligence and military superiority in the field of FRT. Failing to do so will cede the technological and intelligence-driven advantages the United States possesses today.

Operational considerations: algorithms are only part of the picture

Given the importance of NIST test results for competing vendors, it is no surprise that developers tune their algorithms to perform best under specific testing conditions. And while these testing conditions are relevant to the myriad applications of FRT, they do not replicate the true operational conditions facing agents and analysts in the field. For example, the top two performers in NIST’s recent 1:N testing (pp. 40-41) achieved accuracy above 99.2% but required search times of more than 2.6 seconds.
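A back-of-the-envelope calculation makes the speed and accuracy trade concrete. This sketch assumes each worker runs searches back to back with no queuing or batching overhead, and treats the quoted accuracy as rank-one accuracy; the 0.5-second search time and 98.9% accuracy used for comparison are hypothetical values, not results from any tested algorithm.

```python
def searches_per_hour(search_seconds: float, workers: int = 1) -> float:
    """Upper bound on searches completed per hour when each worker runs searches back to back."""
    return workers * 3600.0 / search_seconds


def expected_misses(searches: int, rank_one_accuracy: float) -> float:
    """Expected number of searches whose true mate is not returned at rank one."""
    return searches * (1.0 - rank_one_accuracy)


# 2.6 s and 99.2% are the figures cited above; 0.5 s and 98.9% are hypothetical.
print(round(searches_per_hour(2.6)))          # 1385
print(round(searches_per_hour(0.5)))          # 7200
print(round(expected_misses(10_000, 0.992)))  # 80
print(round(expected_misses(10_000, 0.989)))  # 110
```

Under these assumptions, the faster hypothetical algorithm handles roughly five times as many searches per worker while missing about 30 more mates per 10,000 searches; whether that trade is acceptable depends on the application.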

The incremental increases in accuracy for the top

performers do not outweigh the operational considerations for real-world

applications, e.g., border crossings where timely throughput is a critical

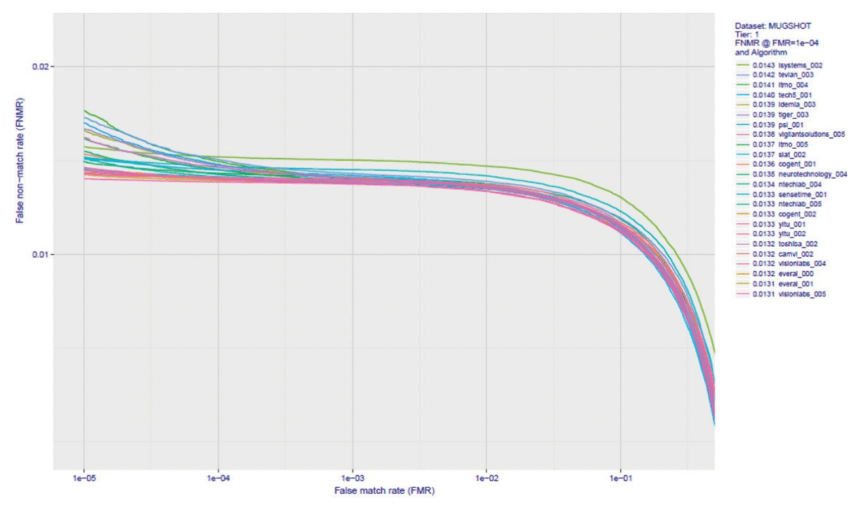

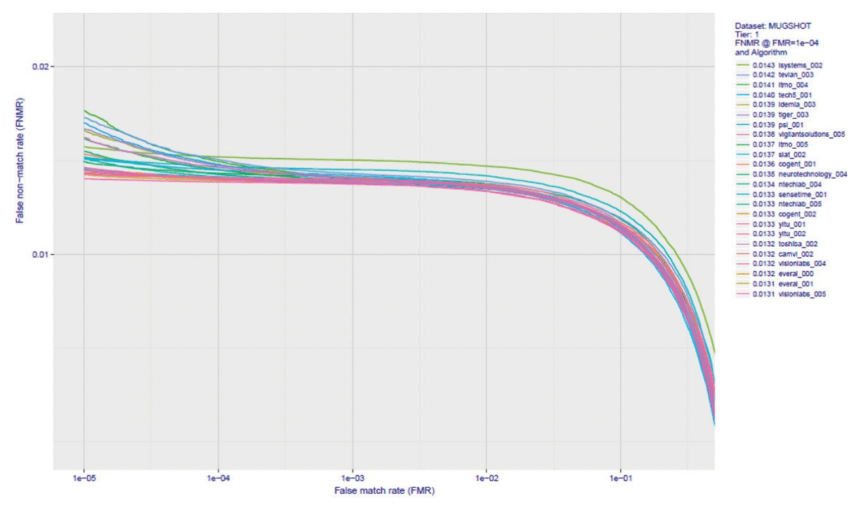

requirement. The following set of detection error tradeoff curves for the 24

best-performing algorithms for mugshot matching clearly shows how tightly

grouped algorithm performance (page

38) has become. Bearing this in mind, performance criteria, other than

matching algorithms, becomes significantly more important in choosing

operational FRT systems. Systems that support operations, implement agency

specific policy, provide effective operator dashboards, interoperate seamlessly

with other agency systems and activities, and more become more important than a

small incremental gain in matching accuracy.
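A DET curve plots the false non-match rate against the false match rate as the decision threshold sweeps across its range, and algorithms are compared by reading their curves at the same FMR. The sketch below computes the points of such a curve from hypothetical scores, again assuming a "match if score > t" rule. Published DET plots are often drawn on logarithmic axes; this sketch only computes the points and does not plot them.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (threshold, FMR, FNMR)


def det_points(genuine: Sequence[float], impostor: Sequence[float]) -> List[Point]:
    """Return (threshold, FMR, FNMR) using every observed score as a candidate threshold."""
    points = []
    for t in sorted(set(genuine) | set(impostor)):
        fmr = sum(s > t for s in impostor) / len(impostor)
        fnmr = sum(s <= t for s in genuine) / len(genuine)
        points.append((t, fmr, fnmr))
    return points


def fnmr_at_fmr(points: List[Point], target_fmr: float) -> float:
    """Lowest FNMR among thresholds whose FMR does not exceed target_fmr (1.0 if none qualify)."""
    return min((fnmr for _, fmr, fnmr in points if fmr <= target_fmr), default=1.0)


genuine = [0.6, 0.7, 0.8, 0.9]    # hypothetical same-person scores
impostor = [0.1, 0.2, 0.3, 0.65]  # hypothetical different-person scores

points = det_points(genuine, impostor)
for t, fmr, fnmr in points:
    print(f"t={t:.2f}  FMR={fmr:.2f}  FNMR={fnmr:.2f}")
print("FNMR at FMR=0:", fnmr_at_fmr(points, 0.0))
```

When two algorithms return nearly the same FNMR at the operating FMR, as the tightly grouped curves suggest, that single number no longer separates them, and the system-level criteria above carry more weight.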

Public safety and investigative applications

Ideal testing conditions rarely exist in real-world FRT applications. In public safety and lawful surveillance applications, cameras are seldom positioned to obtain the portrait-style images that form the basis of the NIST testing described thus far: cooperative single subjects, ISO/IEC 19794-5-conformant full-frontal images, and mean interocular distances from 69 to 123 pixels. In real-world applications, pose, illumination, expression, aging, and occlusion become significant issues.
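Interocular distance, the pixel distance between the two eye centers, is a simple proxy for how much facial detail an image carries. As a hedged illustration, assuming eye-center coordinates are already available from some landmark detector (not specified here), the sketch below checks whether a probe falls within the 69 to 123 pixel range quoted above. The text gives that range in terms of mean interocular distance across the test images, so using it as a per-image screen is an assumption of this sketch.

```python
import math
from typing import Tuple

Point = Tuple[float, float]

# Range quoted for the NIST portrait-style images; applied per image here by assumption.
PORTRAIT_IOD_PX = (69.0, 123.0)


def interocular_distance(left_eye: Point, right_eye: Point) -> float:
    """Euclidean distance in pixels between the two eye centers."""
    return math.dist(left_eye, right_eye)


def resembles_portrait_resolution(left_eye: Point, right_eye: Point,
                                  bounds: Tuple[float, float] = PORTRAIT_IOD_PX) -> bool:
    """True if the eye spacing falls inside the portrait-style range."""
    lo, hi = bounds
    return lo <= interocular_distance(left_eye, right_eye) <= hi


# Hypothetical eye-center coordinates.
print(resembles_portrait_resolution((100, 200), (190, 200)))  # cooperative portrait, eyes 90 px apart
print(resembles_portrait_resolution((410, 300), (432, 302)))  # distant surveillance face, about 22 px apart
```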

Algorithms are just part of a system. For face recognition, the camera itself,

its positioning, and lighting set an upper limit on system performance. Consider

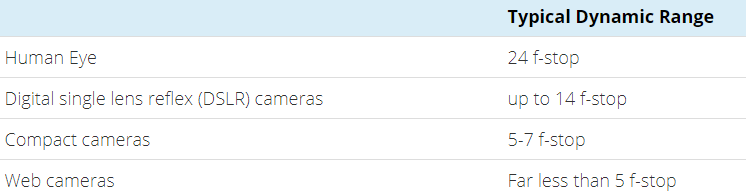

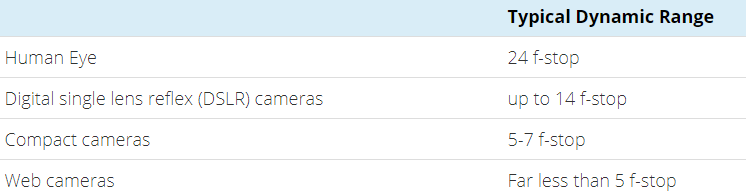

the following for

dynamic ranges of commercially available cameras in comparison to the

human eye.

The use of quality cameras is essential and due to

the advance of technology such cameras are affordable. Many older cameras really

should be replaced.
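Dynamic range is quoted either in photographic stops or in decibels, which makes spec sheets easy to misread. The small conversion sketch below assumes the 20 * log10 convention commonly used for image-sensor specifications; the contrast ratios shown are arbitrary examples, not measurements of any particular camera.

```python
import math


def stops(contrast_ratio: float) -> float:
    """Dynamic range in photographic stops: each stop doubles the light level."""
    return math.log2(contrast_ratio)


def decibels(contrast_ratio: float) -> float:
    """Dynamic range in dB, using the 20 * log10 convention common for sensor specs."""
    return 20.0 * math.log10(contrast_ratio)


def stops_to_db(n_stops: float) -> float:
    """Convert a range given in stops to decibels."""
    return decibels(2.0 ** n_stops)


print(stops(1024))               # 10.0
print(decibels(1000))            # a 1000:1 ratio
print(round(stops_to_db(1), 2))  # 6.02
```

Under this convention each stop is worth about 6 dB, so a difference of a few stops between two cameras corresponds to a large difference in how much shadow and highlight detail each can hold in a single frame.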

Lighting conditions are well known to have a significant impact on face recognition algorithm performance. For example, the limited dynamic range of both digital and film cameras will sometimes “wash out” darker-complexioned subjects against high-reflectance backgrounds, rendering many FR algorithms ineffective. Ambient or artificial lighting also has an enormous impact on system performance, while very few FR algorithms (and no commercial ones) work in the infrared. At present, there is no comprehensive body of testing from which to draw conclusions about these performance impacts.
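Some of this loss can at least be detected before a face reaches the matcher. As a minimal sketch, assuming an 8-bit grayscale face crop has already been located and that a 20% clipping limit is a reasonable flag (both hypothetical choices), the code below measures how many face pixels are pinned at pure black or pure white, which is what happens to facial detail when exposure is set for a bright background. It is a crude exposure screen, not a measure of matcher performance.

```python
from typing import Sequence, Tuple


def clipping_fractions(face_pixels: Sequence[int], bit_depth: int = 8) -> Tuple[float, float]:
    """Return (shadow_fraction, highlight_fraction) of face pixels pinned at the sensor limits."""
    if not face_pixels:
        raise ValueError("empty face region")
    max_code = (1 << bit_depth) - 1  # 255 for 8-bit images
    n = len(face_pixels)
    shadows = sum(p <= 0 for p in face_pixels) / n
    highlights = sum(p >= max_code for p in face_pixels) / n
    return shadows, highlights


def likely_washed_out(face_pixels: Sequence[int], max_clipped: float = 0.2, bit_depth: int = 8) -> bool:
    """Flag a face crop whose detail is largely lost to clipping (threshold is hypothetical)."""
    shadows, highlights = clipping_fractions(face_pixels, bit_depth)
    return shadows + highlights > max_clipped


# Hypothetical face crop, underexposed because the camera metered on a bright background.
dark_face = [0, 0, 5, 40, 60, 80, 0, 20]
print(clipping_fractions(dark_face))
print(likely_washed_out(dark_face))
```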

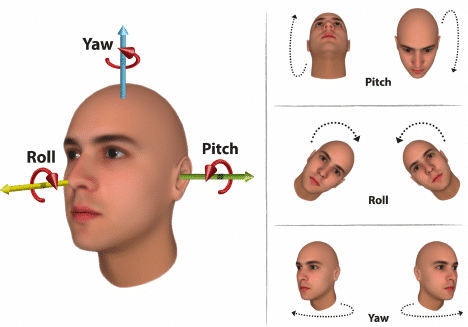

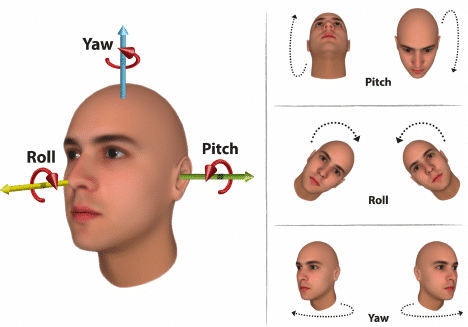

Pose is another operational challenge. The head of a stationary body has three degrees of rotational freedom, about the x, y, and z axes, commonly termed pitch, yaw, and roll. Each affects FR algorithm accuracy. Recent independent testing has not addressed the impact of roll and pitch.

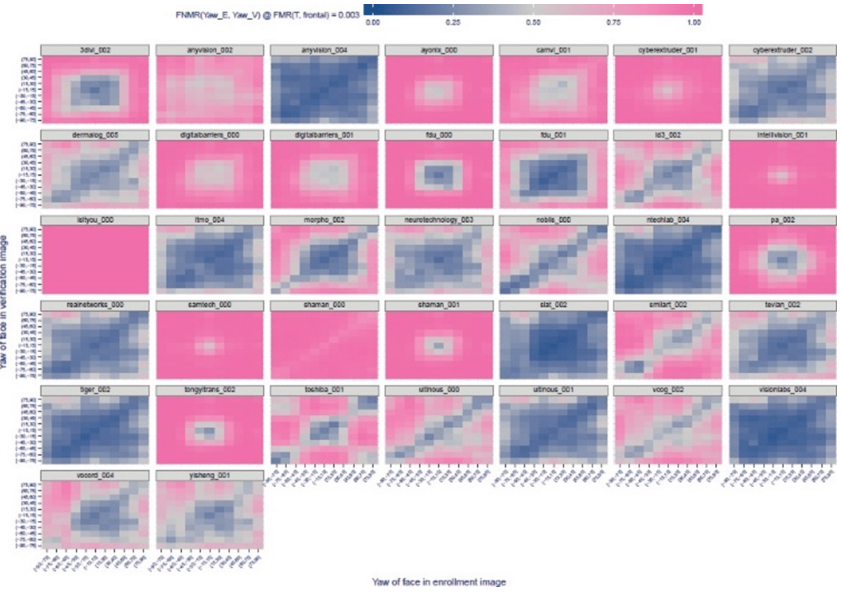

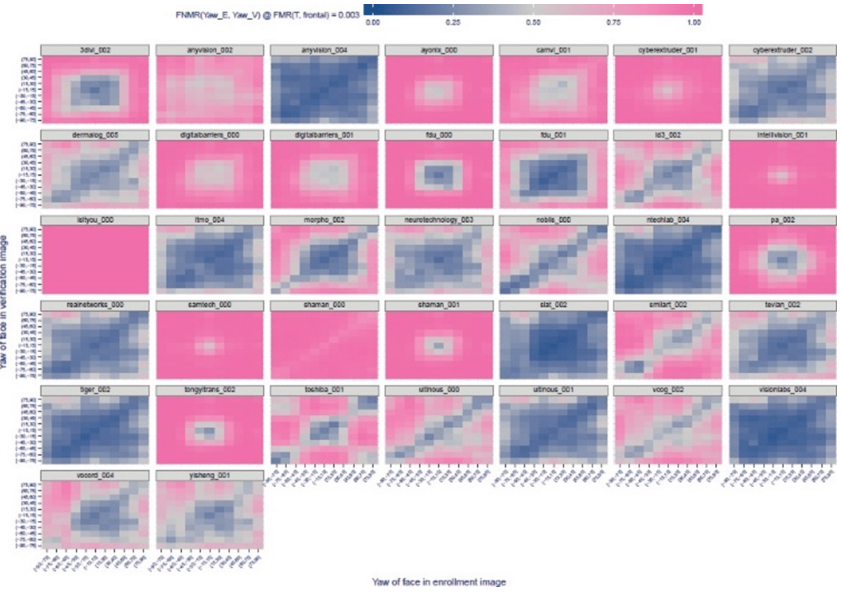

However, yaw results (rotations of the head to the left and right) from NIST’s January 2019 1:N testing (page 50) reveal a significant impact on false non-match rates for many algorithms. In the accompanying figure, darker is better.

Head pitch was also found to have a major adverse impact on FRT performance in previous testing; its impact on current state-of-the-art algorithms, however, is unknown.
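To make the three rotations concrete, the sketch below composes pitch, yaw, and roll into a single rotation matrix and reports how far the face’s frontal direction points away from the camera axis. The axis convention (x to the side, y up, z toward the camera) and the composition order (pitch, then yaw, then roll) are assumptions; other conventions exist. One property the code makes visible: roll alone leaves the face pointing at the camera, whereas yaw and pitch turn it away.

```python
import math
from typing import List

Matrix = List[List[float]]


def rot_x(a: float) -> Matrix:
    """Rotation about the x axis (pitch: nodding up and down)."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a: float) -> Matrix:
    """Rotation about the y axis (yaw: turning left and right)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a: float) -> Matrix:
    """Rotation about the z axis (roll: tilting toward a shoulder)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def off_axis_angle_deg(pitch_deg: float, yaw_deg: float, roll_deg: float) -> float:
    """Angle between the rotated frontal direction (0, 0, 1) and the camera axis."""
    p, y, r = (math.radians(v) for v in (pitch_deg, yaw_deg, roll_deg))
    rotation = matmul(rot_z(r), matmul(rot_y(y), rot_x(p)))
    facing_z = rotation[2][2]  # z component of the rotated frontal vector
    return math.degrees(math.acos(max(-1.0, min(1.0, facing_z))))


print(off_axis_angle_deg(0, 30, 0))   # yaw only
print(off_axis_angle_deg(0, 0, 45))   # roll only: face still points at the camera
print(off_axis_angle_deg(20, 30, 0))  # pitch and yaw combine
```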

Aging is another important factor facing operational FRT applications, specifically the ability to recognize the same individual at different ages while rejecting impostors.

NIST testing reveals that nearly all existing algorithms have significant performance deficiencies for the very young and the very old. Further, there is wide variation in performance between algorithms for populations in the 10 to 64 age range.
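Deficiencies of this kind become visible only when error rates are broken out by age cohort. The sketch below assumes each genuine comparison is tagged with the subject’s age at capture and uses hypothetical bin edges, scores, and threshold. Aging studies often also track the time elapsed between the two images being compared, which this sketch omits.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical cohort boundaries echoing the 10 to 64 range discussed above.
AGE_BINS = [(0, 9, "0-9"), (10, 64, "10-64"), (65, 130, "65+")]


def age_bin(age: int) -> str:
    for lo, hi, label in AGE_BINS:
        if lo <= age <= hi:
            return label
    raise ValueError(f"age outside configured bins: {age}")


def fnmr_by_age(trials: Iterable[Tuple[int, float]], threshold: float) -> Dict[str, float]:
    """trials: (age at capture, genuine similarity score); a pair is missed if score <= threshold."""
    totals: Dict[str, int] = defaultdict(int)
    misses: Dict[str, int] = defaultdict(int)
    for age, score in trials:
        label = age_bin(age)
        totals[label] += 1
        misses[label] += score <= threshold
    return {label: misses[label] / totals[label] for label in totals}


trials = [(5, 0.40), (7, 0.80), (30, 0.90), (45, 0.85), (50, 0.30), (70, 0.50), (80, 0.45)]
print(fnmr_by_age(trials, threshold=0.6))
```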

The IARPA JANUS program and other academic work are actively addressing the challenges of pose, illumination, expression, and aging (PIE-A). As this research bears fruit, the findings must be promptly incorporated into operational systems. The challenge of limited training data must also be actively addressed to counter the advantages our adversaries are busily exploiting.

There are several schools of thought about what constitutes the best performance for impostor detection, especially within the very challenging cohort of people who share the same gender, age group, and country of birth. Some would focus on performance for problematic age and gender cohorts; others would focus on minimizing the maximum adverse cross-match rate across all cohorts. We will defer further examination of impostor detection to later publications.
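The minimax criterion mentioned above can be stated compactly: compute the false match rate within each cohort and prefer the algorithm (or threshold) whose worst cohort is best. The sketch below contrasts it with a single pooled FMR on hypothetical scores, where the two criteria pick different algorithms. The cohort labels, algorithm names, scores, and threshold are all invented for illustration.

```python
from typing import Dict, List

Scores = Dict[str, List[float]]  # cohort label -> impostor similarity scores within that cohort


def cohort_fmr(impostor_by_cohort: Scores, threshold: float) -> Dict[str, float]:
    """Per-cohort false match rate (a match is declared if score > threshold)."""
    return {c: sum(s > threshold for s in scores) / len(scores) for c, scores in impostor_by_cohort.items()}


def worst_case_fmr(impostor_by_cohort: Scores, threshold: float) -> float:
    """Largest per-cohort FMR: the quantity the minimax school tries to minimize."""
    return max(cohort_fmr(impostor_by_cohort, threshold).values())


def pooled_fmr(impostor_by_cohort: Scores, threshold: float) -> float:
    """FMR over all impostor comparisons, ignoring cohort membership."""
    all_scores = [s for scores in impostor_by_cohort.values() for s in scores]
    return sum(s > threshold for s in all_scores) / len(all_scores)


# Hypothetical same-gender, same-age-group, same-country-of-birth cohorts.
algorithms: Dict[str, Scores] = {
    "alg_A": {"F/20-29/X": [0.7, 0.8, 0.1, 0.2], "M/60-69/Y": [0.1] * 8},
    "alg_B": {"F/20-29/X": [0.7, 0.1, 0.2, 0.3], "M/60-69/Y": [0.65, 0.7, 0.1, 0.1, 0.2, 0.2, 0.3, 0.3]},
}
t = 0.6
print("minimax choice:", min(algorithms, key=lambda name: worst_case_fmr(algorithms[name], t)))
print("pooled choice: ", min(algorithms, key=lambda name: pooled_fmr(algorithms[name], t)))
```

Here alg_A has the lower overall FMR but concentrates its false matches in one cohort, so a pooled metric favors it while the minimax criterion favors alg_B.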

Summary

When deployed responsibly, face recognition in its current state has proven to have unparalleled utility for understanding identity. Given the magnitude of data that law enforcement and intelligence personnel must analyze, FRT must be characterized as a strategic technology for our Nation. Recent algorithm tests indicate that China and Russia are outperforming the U.S. and our allies on FRT. However, results that look exclusively at algorithm performance do not provide a full picture of operational considerations for FRT applications. When the full complement of operational considerations is taken into account, the Chinese and Russian leads become less acute, but this is still a wake-up call for FRT developers in the U.S. and allied countries. With fewer privacy protections afforded in many countries outside the U.S., it is a distinct possibility that our adversaries will continue to improve technologies such as face recognition at a faster pace than the United States and our allies.

To begin addressing this, the U.S. Executive Branch should make anonymized data available to industry for systems development, perhaps with controls on use, a prohibition on further dissemination, and an inspection regimen. Countries with little or no privacy protection can, and do, make vast repositories of individuals’ images, along with the associated metadata, available to their FR developers for algorithm improvement using AI technologies. Similar repositories, with the needed metadata, are not available to commercial developers in the United States or most of our allies, and the cost of creating them is well beyond the resources of most FRT providers. At the same time, the Privacy Act of 1974, as currently interpreted, prevents the government from sharing even anonymized extracts from operational systems. The United States, and most of our allies, will never disregard the privacy interests of our citizens. However, absent names, locations, event histories, and similar information, it is infeasible to associate biometrics, age, gender, and demographic metadata with specific individuals in very large datasets.
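The kind of extract proposed here can be sketched as a field allowlist. Assuming hypothetical field names and a source identifier called record_id, the code below keeps only the face image and coarse demographic metadata, and replaces the source identifier with a random per-release token so that images of the same person stay grouped without carrying the original ID. The field names and token length are illustrative choices, not a description of any agency’s data.

```python
import secrets
from typing import Any, Callable, Dict

# Hypothetical field names. Only allowlisted fields survive; names, locations,
# event histories, and the source record ID are all dropped.
RETAINED_FIELDS = {"face_image", "age", "gender", "demographic_group"}


def make_extractor() -> Callable[[Dict[str, Any]], Dict[str, Any]]:
    """Return a function that builds anonymized extract rows for one data release."""
    tokens: Dict[str, str] = {}  # source ID -> random token, held in memory only while this extractor is in use

    def extract(record: Dict[str, Any]) -> Dict[str, Any]:
        source_id = record["record_id"]
        if source_id not in tokens:
            tokens[source_id] = secrets.token_hex(8)  # random, so it cannot be recomputed from the ID
        row = {field: value for field, value in record.items() if field in RETAINED_FIELDS}
        row["subject"] = tokens[source_id]  # groups images of the same person within this release
        return row

    return extract


extract = make_extractor()
rows = [
    extract({"record_id": "P-001", "name": "Jane Doe", "location": "Gate 12",
             "face_image": b"<image bytes>", "age": 34, "gender": "F"}),
    extract({"record_id": "P-001", "name": "Jane Doe", "event_history": ["entry"],
             "face_image": b"<image bytes>", "age": 35, "gender": "F"}),
    extract({"record_id": "P-002", "name": "John Roe",
             "face_image": b"<image bytes>", "age": 61, "gender": "M"}),
]
print([sorted(r) for r in rows])
```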

The potential disruption from not knowing identities, especially in this era of data explosion, deserves careful attention with respect to our national security. As the epic war of ‘hide and seek’ continues to evolve, it is imperative that appropriate strategic investments be aligned with legal, policy, and best-practice guidelines to support the responsible, effective, and efficient use of FRT to detect threats and verify identities immediately and with high accuracy.

Article originally published on biometricupdate.com
